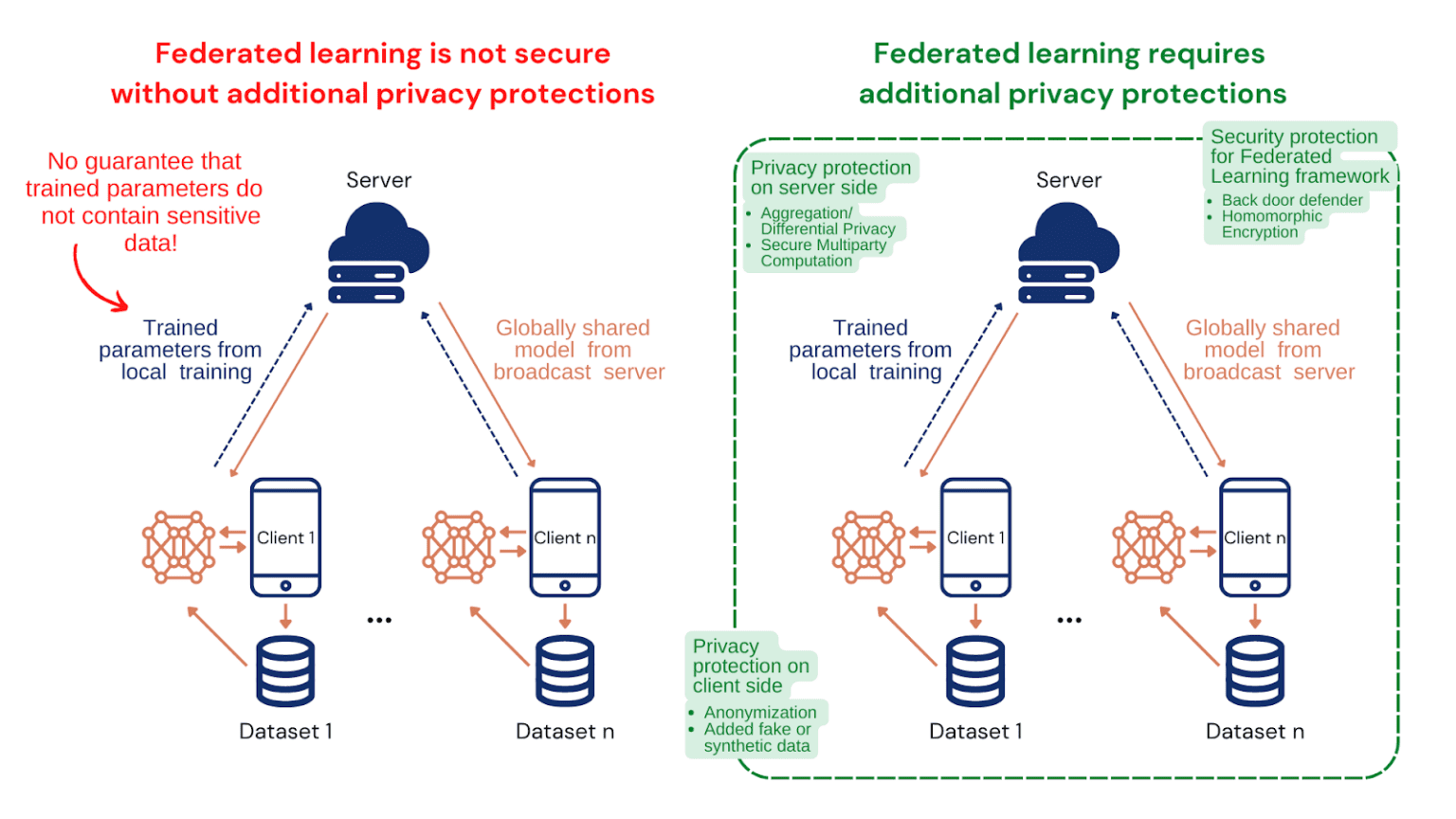

Federated learning is a new technique that has been proposed as a solution to the issues of sensitive data access, transfer, and use for machine learning. But what exactly is federated learning? And why is it not exactly the ideal solution that some think it might be?

What is federated learning?

Federated learning is a system in which a machine learning model, such as a neural network, is trained in the same location and infrastructure where the data is held, with each location holding one part of the overall data. The model is sent from a central server to each location that holds training data, it learns from the data there, and the trained parameters are transferred back to the central server in a secure location. This process is repeated until the central server arrives at the desired solution.
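
To make the loop concrete, here is a minimal sketch of one common way to combine the returned parameters: a size-weighted average, often called federated averaging. The one-variable linear model, the three simulated sites, and all function names are assumptions made for illustration; real deployments add secure transport, much larger models, and many practical safeguards.

```python
import random


def local_train(weights, data, lr=0.1, epochs=5):
    """Train y = w*x + b on one site's data, starting from the global weights."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x / len(data)
            grad_b += 2 * err / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_round(global_weights, sites):
    """Send the model to every site, then average the returned parameters."""
    updates = [local_train(global_weights, data) for data in sites]
    total = sum(len(data) for data in sites)
    # Weight each site's parameters by how many records it trained on.
    w = sum(u[0] * len(d) for u, d in zip(updates, sites)) / total
    b = sum(u[1] * len(d) for u, d in zip(updates, sites)) / total
    return (w, b)


def make_site(n, rng):
    """Simulated site: n records that follow y = 3x + 1 (illustrative data)."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3.0 * x + 1.0) for x in xs]


rng = random.Random(0)
sites = [make_site(20, rng), make_site(30, rng), make_site(50, rng)]

weights = (0.0, 0.0)
for _ in range(200):
    # Only parameters cross the site boundary; the records never leave.
    weights = federated_round(weights, sites)

print(weights)
```

Note that `federated_round` never touches a site's records directly. It only receives the two numbers that `local_train` returns, which is exactly the property that makes federated learning attractive.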

What is good about federated learning?

If the machine learning model moves to where the data is, no sensitive data needs to be transferred to a new location, the logistical and technical issues of moving data do not arise, and slow permit processes for data transfer do not need to be endured. Federated learning also helps create common rules and frameworks for data collaborations, requiring all participants to subscribe to the common rules, data licenses, and terms of the collaboration network. In all, federated learning creates a ‘one stop shop’ from the data user’s point of view.

What are the problems with federated learning?

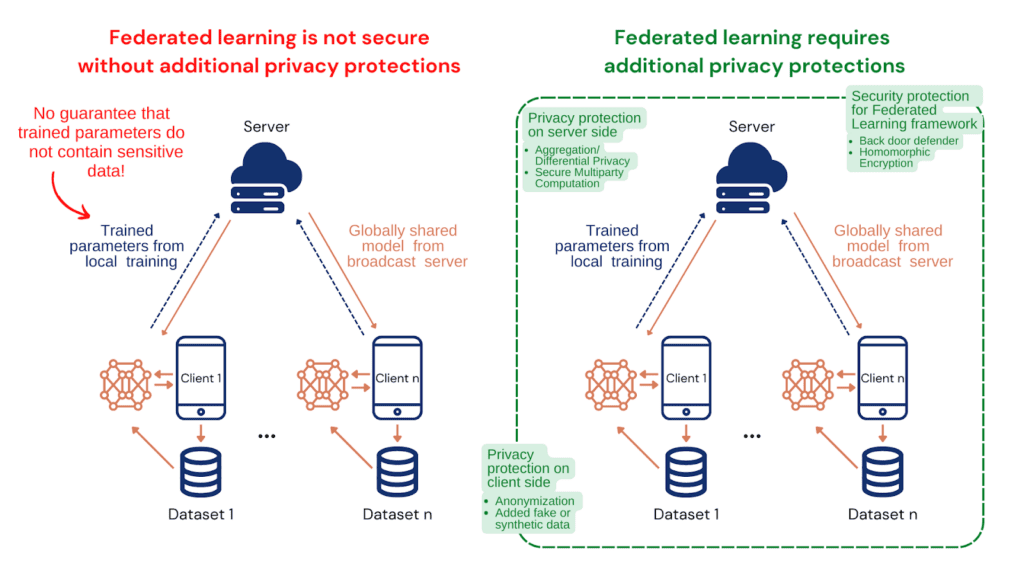

The problem is that federated learning alone does not solve privacy issues. Even if the sensitive data itself is not transferred out of the secure environment, the output of the algorithm could contain sensitive data. Allowing external analysts to run arbitrary code that is much more complex than simple statistics or averages creates a high risk that the algorithm’s output contains sensitive, personally identifying information.
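
A toy case shows how model outputs can carry the data with them. The sketch below assumes a plain linear model trained with squared error on a single record, and the record's values are invented for illustration. Under those assumptions, the gradient that leaves the secure environment is enough to rebuild the record exactly. Attacks on real deep networks are more involved, but they rest on the same idea.

```python
def single_record_gradient(w, b, x, y):
    """Gradient of the squared error (w.x + b - y)^2 for one record."""
    err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [2 * err * xi for xi in x]  # proportional to the record itself
    grad_b = 2 * err
    return grad_w, grad_b


# Hypothetical sensitive record: age, systolic blood pressure, cholesterol.
secret_record = [54.0, 131.0, 6.2]
label = 1.0

# Current model parameters, known to whoever sent the model to the site.
w, b = [0.01, -0.02, 0.03], 0.0

# This is all that is sent back from the secure environment.
grad_w, grad_b = single_record_gradient(w, b, secret_record, label)

# The receiver divides out the shared error term and recovers the record.
recovered = [gw / grad_b for gw in grad_w]
print(recovered)
```

No raw data was transferred here, only "trained parameters", yet the recipient ends up holding the patient's values.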

What if we take a good look at the code that analysts are running on our sensitive data? Unfortunately, proving that a function or program used in federated learning does not leak sensitive information just by reading the code is very challenging, and in practice almost impossible. Detecting sensitive information leakage in user-provided functions or programs is complex due to factors such as semantic security, which deals with nuanced definitions of what constitutes sensitive information, and covert channels, which can be hidden in the code deliberately to leak information.
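
As an illustration of why code review is not enough, consider the deliberately simple covert channel below. The function name, the patient ages, and the encoding scheme are all invented for this sketch. The function appears to return an average rounded to two decimals, but it hides one individual's age in digits that a reviewer is unlikely to check.

```python
def innocent_looking_mean(ages, patient_index=0):
    """Looks like a rounded average, but smuggles out one patient's age."""
    mean = sum(ages) / len(ages)
    # The extra term sits far below the two-decimal precision on display.
    return round(mean, 2) + ages[patient_index] / 1_000_000


def decode(value):
    """What the analyst runs at home to read the hidden digits back."""
    return round((value - round(value, 2)) * 1_000_000)


ages = [34, 58, 71, 45]  # hypothetical sensitive data held at one site
leaked = innocent_looking_mean(ages)

print(f"{leaked:.2f}")  # what a data owner would see in a report
print(decode(leaked))   # what the analyst actually learns
```

A real attacker would hide the channel far more carefully, for example in the low-order bits of thousands of model weights, which is what makes this kind of leakage so hard to rule out by inspection.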

What about inspecting the outputs of the algorithm? From the data owner’s point of view, this is also very difficult to verify. Neural networks and other machine learning models are often black boxes, where the output is a series of weights in a format that data owners cannot easily understand or interpret. In this sense, it is extremely difficult or impossible for data owners to verify that the output of a machine learning model does not contain sensitive personal data and that it is compliant with privacy legislation.

How can anonymization and federated learning work together?

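Anonymizing data before the federated learning algorithm gets to work on it guarantees data privacy and quality. Because the algorithm only ever sees anonymized data, privacy can be verified on the data itself rather than on the analyst’s code or the model’s output.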

Assuming you have an excellent anonymization tool with high-quality output, like VEIL.AI’s advanced anonymization tool, there are no downsides to this step. Anonymizing data before federated learning gives verifiable privacy, quality, and correctness, and using a federated learning algorithm afterwards means that the logistical advantages of not transferring data and the benefits of common rules and data frameworks can still be enjoyed.

In all, federated learning and anonymization are complementary tools that can work together to ensure smooth data access without privacy risks, giving access to ever larger training datasets and, in turn, better machine learning models.

Are you interested in federated learning with additional privacy protections? Contact us.